Let’s think flexibly: remote learning does not have to be segmented into seven assignments a day just because learners take seven classes a day.

A Conversation About Education as an Ecosystem

Go Upstream

If you are comfortable with the injustices in our state, including systemic poverty and racism, do nothing. If you would like sustainable change, go upstream: support our public schools in unlearning so that they can create policies and structures that are truly just and equitable, AND ensure they are properly funded.

Five Non-Negotiables in Assessment for Learning

By Gary Chapin

Six or seven years ago, when we were formalizing our approach to "Assessment for Learning" at the Center for Collaborative Education (CCE), we called it Quality Performance Assessment (QPA), meaning performance assessment that achieves technical quality (valid, reliable, sufficient, and free of bias). At the time, we tried to capture the essence of our understanding in a conceptual framework (see figure). Student learning, as always, is at the center, embraced by teacher learning (capacity) and leadership and policy support (conditions). Embedded within and between these is an iterative process that moves from performance-assessment design to data analysis to aligned instruction, and then begins again. The graphic is fairly simple, but six years later, it stands up!

On reflection, it becomes clear that the QPA framework rests on a set of assumptions. These five non-negotiable qualities are essential to building a successful performance-assessment system:

Radical Student Engagement: Student learning is the nexus of the framework, and student engagement is the nexus of student learning. Assessments don't tell us what kids know. Assessments tell us what kids are willing to show us that they know. Lack of engagement and lack of knowledge (unwillingness to demonstrate and inability to demonstrate) look virtually identical. A quality performance assessment provides opportunities for learning that are relevant and real for the kid. The best way to do this is to allow the student to genuinely collaborate in the design and/or execution of the assessment: radical learner agency. At the very least, ask yourself: will kids be more likely to succeed because of the design and structure of your assessment, or in spite of it?

Alignment: Traditionally, validity is an expression of basic alignment. Does the assessment assess the thing you want to assess? Does the learning target align with the instruction, the rubric, and so on? Alignment has come to mean so much more. Does it align with the student? With their context? With their culture(s)? With their gifts? These are the questions that lead to culturally responsive and anti-bias pedagogies, as well as to the primacy of relationships for learning. Like everything else on this list, these things have ceased to be optional.

The Design Cycle: Performance assessments are not static things that you can look at and say, "This is valid. This is not." Validity depends as much on context and implementation as it does on the components of the assessment. Is this assessment valid for a particular purpose and a particular kid? Are there entry points for every kid? Similarly, reliability depends on teams constantly examining what proficiency looks like. The calibration process happens later in the game than we would like (because it requires student work), but it is easily the most important protocol going. We've seen untold educators go through a calibration event, examining student work, with eyes wide as the value of performance assessment and the genius within their students become tangibly evident. And then the teachers do it again. And again. Continuous. Forever. Design, implementation, validation, and calibration are iterative and reflective, not linear and closed. Be willing to be surprised within the infinite loop.

Body of Evidence: Quality performance assessments require a quality performance-assessment system in order to be truly effective. The naive days of saying one assessment "proves" mastery are long gone. Assessments provide evidence of learning and, combined with other assessments within a system, build a body of evidence for any particular student. From those many points of evidence, sufficiency determinations can be made (is there enough evidence?), and next steps can be planned. Over time, the generative nature of the design cycle becomes obvious: the collection of assessment data is more than the sum of its parts. The QPA framework informs at all levels: student, classroom, school, district, state, etc. No level can function well without the others.

The Nurturing Learning Culture: In the framework, the triangle of Student Learning is embraced by the circles of Teacher Learning and Leadership and Policy Support. As Walt Whitman wrote in Song of Myself, these two circles are "large [and] contain multitudes." In a few words, they sum up many interacting systems. Think of the structural, symbolic, political, and personal dimensions that define a culture (h/t Bolman and Deal). How must this be enacted? Time for PLCs to meet. Freedom for PLCs to set their own agendas. Care, perhaps in the form of food and recognition, for the teachers who step up. A nurturing culture includes learning for teachers (though not necessarily "training"). It includes book groups, common planning time, space to vent, and the recognition that learning happens one conversation at a time. It requires trust, trustworthiness, and vulnerability (or maybe the vulnerability comes first) from all parties.

The formal Assessment for Learning Project is almost three years old. CCE's Quality Performance Assessment has existed in its present form for over six years. We've learned again and again that even the best assessment techniques are not, on their own, sufficient for a vital assessment-for-learning culture. For the deep cultural change that is assessment for learning, a shift in values, ethics, and pedagogy, these five qualities are non-negotiable.

This post was originally published on Education Week’s Next Gen Learning in Action blog.

3 Months, 3 Micro-credentials, 1 Teacher: Building Performance Assessment Capacity in Georgia

“Starting a new initiative at a school can be time-consuming and challenging because it requires asking people to change their current practice in ways that may be unfamiliar or uncomfortable. For this project, I had to get each stakeholder—the principal, teachers, and others—on board with performance assessment, a different way to measure student learning.” (Kimberly Sheppard)

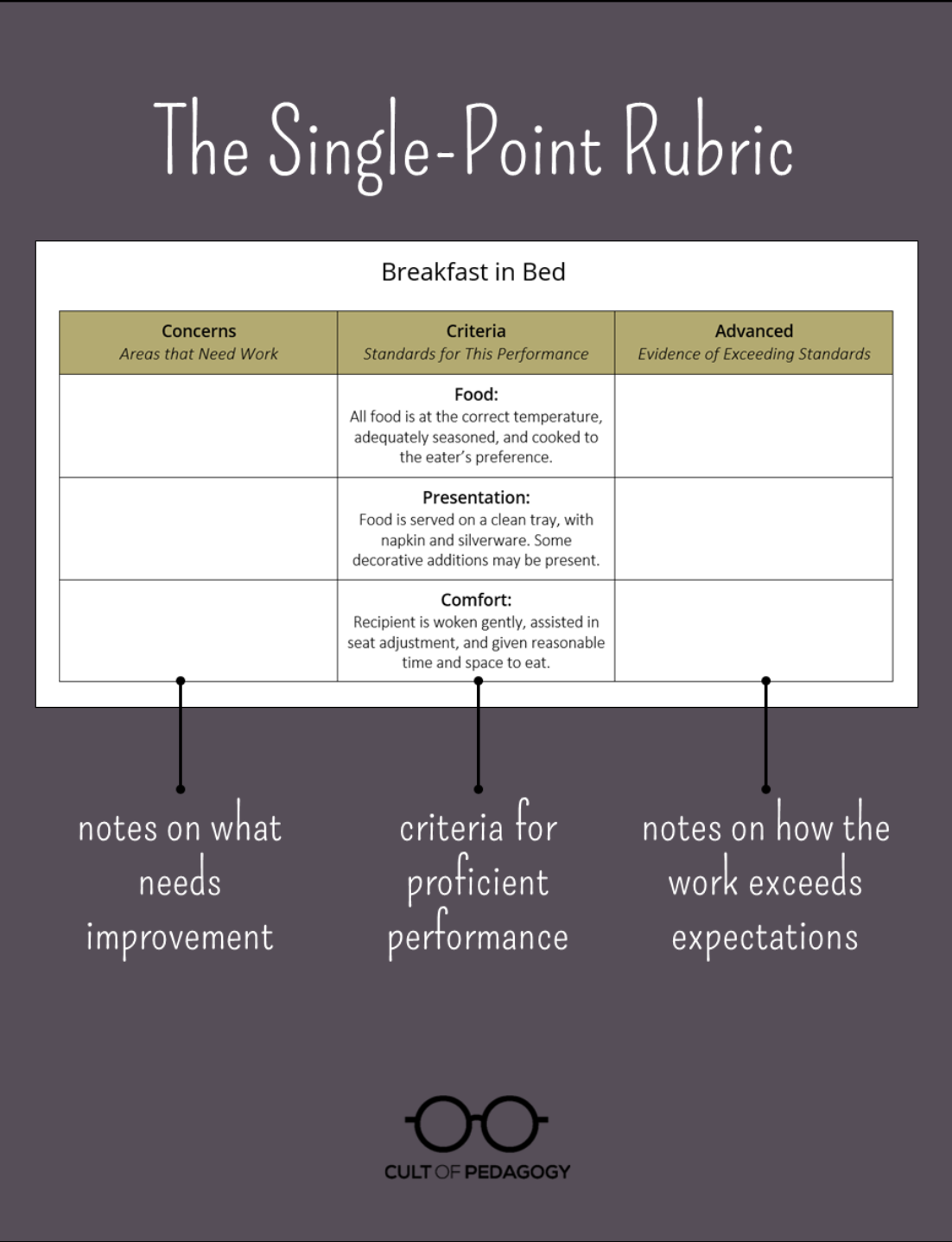

It's Time We Talked About Rubrics

“We have been using the four-point rubric with nearly religious consistency for more than a dozen years. Time has passed. Revisiting the idea of the rubric to see how well it has worked and how it can better serve our students is a legitimate and worthwhile exercise. It’s a conversation we should all be having.”